Few-Shot Meta-Learning and Transfer Learning

Remote sensing models often fail when moved to a new region, sensor, or task, even when the label names stay the same (“urban”, “forest”, “water”).

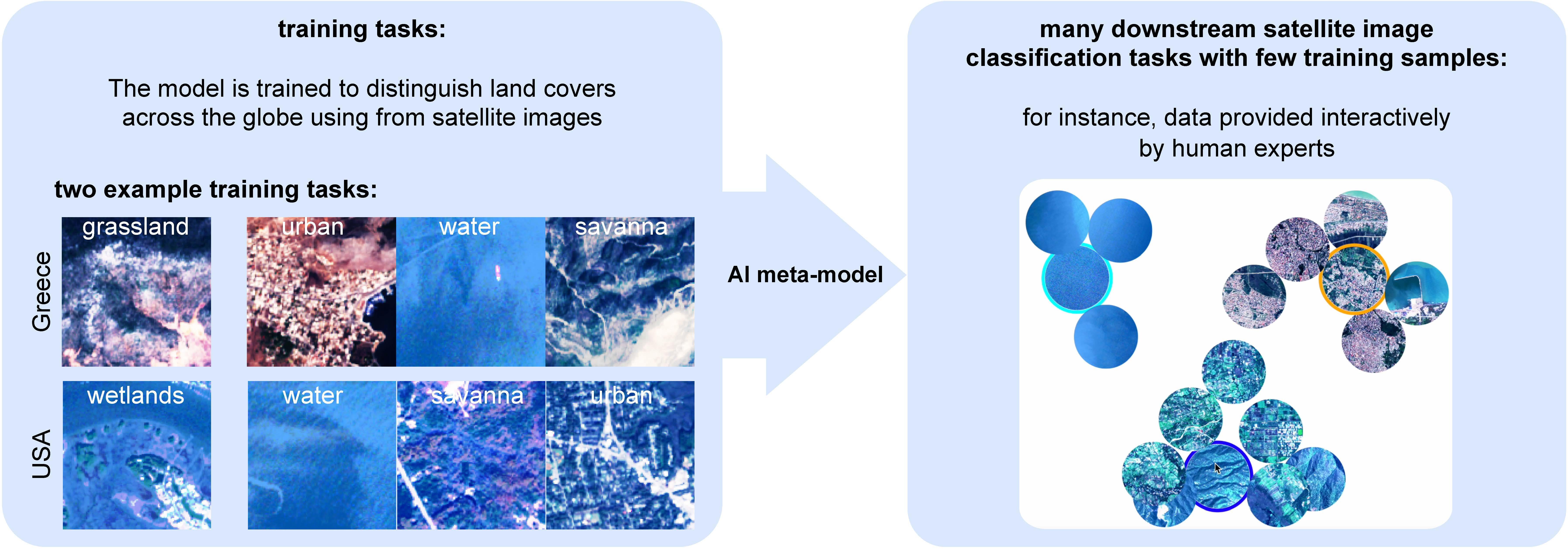

Meta-learning tackles this by training models to adapt quickly: instead of requiring thousands of new labels, the model adapts to a new setting from just a few labeled examples (“few-shot”).

Image credit: EPFL / Cécilia Carron (CC BY-SA 4.0).

Why few-shot meta-learning for land cover?

Land cover looks different across the planet (architecture, vegetation seasonality, soil/backgrounds, haze, viewing geometry). Few-shot meta-learning treats this as an adaptation problem: learn how to learn, so the model can update itself rapidly for a new region or dataset.
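To make the adaptation framing concrete, the sketch below shows one common way to build a few-shot task (an "episode") from the labeled samples of a single region: a small support set to adapt on and a disjoint query set to evaluate the adapted model. This is an illustrative assumption, not the data pipeline of the papers listed here; the function name `sample_episode`, its parameters, and the toy region are all hypothetical.

```python
import random
from collections import defaultdict

def sample_episode(samples, n_way=3, k_shot=5, n_query=5, rng=None):
    """Draw one N-way K-shot task from (features, label) pairs of a single region."""
    rng = rng or random.Random()
    by_class = defaultdict(list)
    for x, y in samples:
        by_class[y].append(x)
    # Only classes with enough examples for both support and query sets are eligible.
    eligible = sorted(c for c, xs in by_class.items() if len(xs) >= k_shot + n_query)
    if len(eligible) < n_way:
        raise ValueError("not enough classes with sufficient samples")
    classes = rng.sample(eligible, n_way)
    support, query = [], []
    for c in classes:
        # Sampling without replacement keeps support and query disjoint.
        picked = rng.sample(by_class[c], k_shot + n_query)
        support += [(x, c) for x in picked[:k_shot]]
        query += [(x, c) for x in picked[k_shot:]]
    return support, query

# Toy "region": 12 placeholder samples for each of four land-cover classes.
region = [((i, c), c) for c in ("urban", "forest", "water", "cropland") for i in range(12)]
support, query = sample_episode(region, n_way=3, k_shot=2, n_query=4, rng=random.Random(0))
print(len(support), len(query))
```

During meta-training, many such episodes would typically be drawn from different source regions, so that each training step mimics the few-label situation expected in a new region.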

Key papers & main contributions

Meta-learning to address diverse Earth observation problems across resolutions (Communications Earth & Environment, 2024)

- Introduces METEOR, a meta-learning framework designed to adapt across diverse Earth observation tasks, including changes in resolution, spectral bands, and label spaces.

- Demonstrates strong few-shot adaptation: the system can be retrained for new applications using only a handful of high-quality examples.

- Shows a practical path toward one foundation “meta-model” that can be quickly specialized to new EO problems instead of training separate models from scratch.

“Chameleon AI” explainer + visuals (EPFL news)

EPFL news article: Chameleon AI program classifies objects in satellite images faster

- Provides an accessible overview of the METEOR idea and why it matters for EO settings where labeled data is scarce.

- Highlights example downstream tasks spanning very different domains (e.g., ocean debris, deforestation, urban structure, change after disasters) to motivate task-to-task transfer.

Meta-Learning for Few-Shot Land Cover Classification (EarthVision @ CVPRW, 2020 — Best Paper Award)

- Formulates geographic diversity as a few-shot transfer problem: adapt a land-cover model to a new region with only a few labeled samples.

- Evaluates MAML-style optimization-based meta-learning for both classification and segmentation and shows benefits especially when source and target domains differ.

- Provides early evidence that learning-to-adapt can outperform standard “pretrain + finetune” pipelines under real-world distribution shift.
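The optimization-based idea behind MAML-style methods can be sketched on a toy problem. The example below is a minimal illustration, not the paper's implementation: it uses the first-order approximation of MAML (which skips second derivatives), a linear regression model instead of a neural network, and synthetic tasks. All function names, the task generator, and the hyperparameters are assumptions chosen for illustration.

```python
import numpy as np

def loss_and_grad(w, X, y):
    """Mean squared error of a linear model and its gradient with respect to w."""
    err = X @ w - y
    return float(np.mean(err ** 2)), 2.0 * X.T @ err / len(y)

def adapt(w, X, y, inner_lr=0.1, steps=1):
    """Inner loop: a few gradient steps on the support set of one task."""
    w = w.copy()
    for _ in range(steps):
        _, g = loss_and_grad(w, X, y)
        w -= inner_lr * g
    return w

def make_task(rng, n=10):
    """Hypothetical task: regression with task-specific weights near [2, -1]."""
    w_true = np.array([2.0, -1.0]) + 0.3 * rng.standard_normal(2)
    X = rng.standard_normal((2 * n, 2))
    y = X @ w_true
    return (X[:n], y[:n]), (X[n:], y[n:])  # (support set, query set)

def fomaml(meta_steps=300, tasks_per_step=4, outer_lr=0.05, seed=0):
    """Outer loop: learn an initialization that adapts well after one inner step."""
    rng = np.random.default_rng(seed)
    w = np.zeros(2)
    for _ in range(meta_steps):
        meta_grad = np.zeros_like(w)
        for _ in range(tasks_per_step):
            (Xs, ys), (Xq, yq) = make_task(rng)
            w_task = adapt(w, Xs, ys)
            # First-order approximation: use the query-set gradient evaluated
            # at the adapted weights as the meta-gradient for the initialization.
            _, g = loss_and_grad(w_task, Xq, yq)
            meta_grad += g
        w -= outer_lr * meta_grad / tasks_per_step
    return w

w_meta = fomaml()
print("meta-learned initialization:", w_meta)
```

In this analogy, each synthetic task stands in for a region: the inner loop corresponds to adapting with a few labeled samples from that region, and the outer loop tunes the shared initialization so that this adaptation works well on held-out query samples.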

Also available via the CVF open-access workshop proceedings: